
15/07/2025

JUPITER: Europe’s first exascale supercomputer

By Ilya Zhukov (Jülich Supercomputing Centre – JSC)

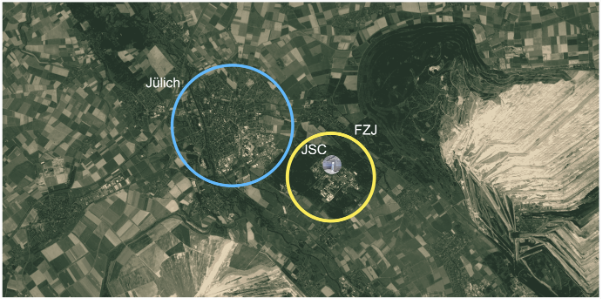

Big things are happening near a small town in Germany – but on a European scale. We’re talking about JUPITER, Europe’s first exascale supercomputer [1, 2], currently being commissioned near the quiet town of Jülich. With its launch, Europe is entering the global exascale arena.

Jülich may not be on most tourist maps, but it’s long been a hub of science and innovation. It’s home to Forschungszentrum Jülich (in English “Research Center Jülich”), one of Europe’s largest research centers, with a long-standing reputation in neurobiology, climatology, quantum technologies, and, of course, high-performance computing. So, it’s no surprise that the hosting site there, the Jülich Supercomputing Centre (JSC), was chosen as the home of JUPITER.

Map showing Jülich (blue), Forschungszentrum Jülich – FZJ (yellow), and Jülich Supercomputing Centre – JSC (inside yellow circle). The white area on the map shows nearby coal mines. (Source: Google Maps)

What exactly is JUPITER?

Forschungszentrum Jülich has a naming tradition: nearly everything developed there starts with “JU”. This ranges from supercomputers like JUQUEEN, JURECA, and JUWELS to Justin (the first beaver at the campus lake, not related to any pop stars) and JuBräu (a group of doctoral students at Forschungszentrum Jülich who brew beer as a hobby alongside their research).

The name JUPITER (Joint Undertaking Pioneer for Innovative and Transformative Exascale Research) – Europe’s first exascale supercomputer and the largest planet in the solar system – fits perfectly. It continues the “JU” legacy while reflecting the system’s massive scale and ambition.

JUPITER is capable of performing over one quintillion floating-point operations per second (1 ExaFLOPS, that is, 10^18, or 1 followed by 18 zeros). For comparison, a PlayStation 5 delivers a theoretical peak performance of 10.28 trillion FLOPS (10.28 × 10^12) [3]. This immense computational power makes JUPITER ideally suited for modeling large, complex systems, ranging from global climate simulations and particle physics to AI model training and beyond.
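To put the two numbers side by side, a quick calculation shows how many PlayStation 5 consoles would be needed to match one ExaFLOPS on paper. This is only a rough sketch: the two peak figures may refer to different numerical precisions, and peak numbers say nothing about how real workloads scale across tens of thousands of devices.

```python
# Back-of-the-envelope comparison using the peak figures quoted above.
JUPITER_FLOPS = 1e18        # 1 ExaFLOPS
PS5_PEAK_FLOPS = 10.28e12   # PlayStation 5 theoretical peak [3]


def consoles_needed(target_flops: float, console_flops: float) -> float:
    """Number of consoles whose combined peak equals target_flops."""
    return target_flops / console_flops


ratio = consoles_needed(JUPITER_FLOPS, PS5_PEAK_FLOPS)
print(f"{ratio:,.0f}")
```

This prints `97,276`, so on paper JUPITER matches roughly 100,000 consoles.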

JUPITER’s architecture

Unlike traditional monolithic supercomputers, JUPITER, built by the French-German consortium Eviden-ParTec, uses a modular architecture that combines separate modules, each tailored to a different kind of workload.

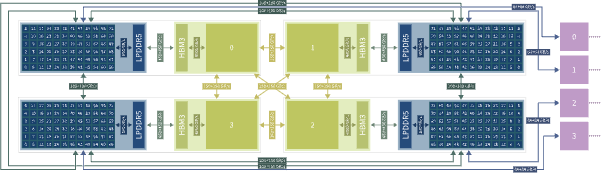

- Booster module: This is where the heavy lifting happens. JUPITER’s Booster module is designed for massively parallel workloads such as AI training and large-scale simulations.

  - Around 6,000 nodes in 125 racks, with around 24,000 NVIDIA GH200 Grace Hopper superchips in total (each combining an Arm CPU and a Hopper GPU).

  - Over 40 ExaFLOPS in lower-precision (dense 8-bit) calculations. This performance can be achieved within a power budget of around 17 megawatts.

- Cluster module: The general-purpose Cluster module is intended for workloads that need high memory bandwidth but do not rely on accelerators. It will be the first system to use the Rhea1 processor, developed by the European start-up SiPearl as part of the European Processor Initiative. The Cluster module will be installed a few months after the Booster module.

Node diagram of the 4× NVIDIA GH200 node design of JUPITER Booster
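The Booster figures are consistent with each other: with the 4× GH200 node design, around 6,000 nodes give around 24,000 superchips. The minimal sketch below checks this and derives two rough ratios. All inputs are the rounded numbers quoted in this post, not official specifications, so the derived values are approximate; the class name `BoosterFigures` is purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoosterFigures:
    """Approximate JUPITER Booster figures as quoted in this post (illustrative)."""
    racks: int = 125
    nodes: int = 6_000              # "around 6,000"
    superchips_per_node: int = 4    # 4x GH200 node design
    fp8_exaflops: float = 40.0      # "over 40", dense 8-bit
    power_mw: float = 17.0          # "around 17 megawatts"

    def total_superchips(self) -> int:
        # Superchips across all nodes.
        return self.nodes * self.superchips_per_node

    def nodes_per_rack(self) -> float:
        # Average node density per rack.
        return self.nodes / self.racks

    def exaflops_per_megawatt(self) -> float:
        # Low-precision throughput per megawatt of power budget.
        return self.fp8_exaflops / self.power_mw


b = BoosterFigures()
print(b.total_superchips())                  # 24000
print(b.nodes_per_rack())                    # 48.0
print(round(b.exaflops_per_megawatt(), 2))   # 2.35
```

A frozen dataclass keeps the quoted figures in one place, so changing a single input (for example an updated node count) updates every derived ratio.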

Where do you even put JUPITER?

You don’t just put a supercomputer into an empty machine hall and call it a day. That’s where the Modular Data Centre (MDC) comes into play [4]. The MDC is a 2,300-square-meter facility made up of around 50 prefabricated, interchangeable containers.

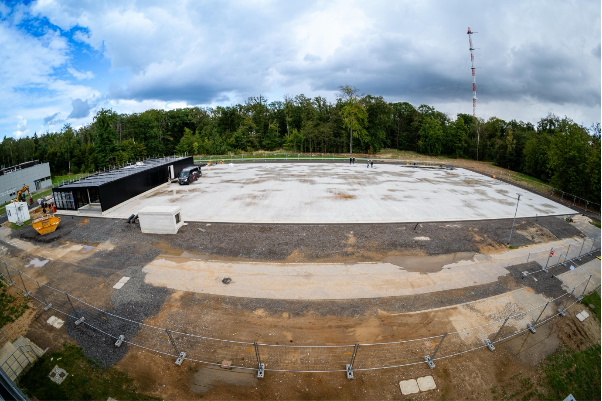

Initial phase of the Modular Data Centre (MDC) construction in Jülich – the foundation laid for a future HPC facility (Source: Herwig Zilken / FZJ)

What’s inside the MDC?

Around 50 containers, including:

- 15 energy modules: each capable of supplying 2.5 megawatts of power.

- 4 storage modules: house the 29-petabyte ExaFLASH, the 308-petabyte ExaSTORE, and the JUPITER Service Island, including the 3.2-terabit-per-second network connection to the outside world.

- 7 double IT modules: each holding up to 20 Eviden BullSequana XH3000 racks, providing room for the 125 JUPITER Booster racks and up to 15 JUPITER Cluster racks. An 8th IT module is reserved for future initiatives, such as the JUPITER AI Factory system (JARVIS). The IT modules arrive ready to go, already built and tested by Eviden, and use liquid cooling, which keeps them efficient and quiet even under heavy workloads.

- Around 10 logistics modules with workshops, warehouses, and other units.
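A quick tally of the module figures listed above shows how the numbers fit together: the seven double IT modules offer exactly enough rack slots for the Booster plus the maximum Cluster configuration, and the energy modules’ combined supply sits well above the Booster’s power budget of around 17 megawatts. This is a back-of-the-envelope sketch that assumes every module’s rating is fully available and ignores redundancy, cooling, and other loads.

```python
# Capacity check for the MDC, using the module counts quoted in this post.
ENERGY_MODULES = 15
MW_PER_ENERGY_MODULE = 2.5
DOUBLE_IT_MODULES = 7
MAX_RACKS_PER_IT_MODULE = 20
BOOSTER_RACKS = 125
MAX_CLUSTER_RACKS = 15

# Total nominal power supply of all energy modules (megawatts).
power_capacity_mw = ENERGY_MODULES * MW_PER_ENERGY_MODULE
# Rack slots available vs. racks planned for Booster + Cluster.
rack_capacity = DOUBLE_IT_MODULES * MAX_RACKS_PER_IT_MODULE
racks_planned = BOOSTER_RACKS + MAX_CLUSTER_RACKS

print(power_capacity_mw)              # 37.5
print(rack_capacity, racks_planned)   # 140 140
```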

The Modular Data Centre (MDC) nearing completion – most modules installed, preparing to host Europe’s first exascale supercomputer, JUPITER (Source: Herwig Zilken / FZJ)

What about energy efficiency?

Waste heat carried away by the cooling system can be extracted and used to heat buildings on the Jülich campus. Once connected to the heating network, it should cover a substantial proportion of Forschungszentrum Jülich’s heating requirements in the medium term.

Who’s behind all this?

A project this big takes a serious team effort:

Built by: Eviden (Atos Group)

Owned by: EuroHPC Joint Undertaking

Operated by: Jülich Supercomputing Centre (JSC)

Total investment: Around €500 million over six years

Funded by:

- 50% European Union

- 25% German Federal Ministry of Education and Research (BMBF)

- 25% Ministry of Culture and Science of North Rhine-Westphalia (MKW NRW)
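With the shares listed above, each funder’s approximate contribution follows directly. A minimal sketch using the rounded total from this post; for the per-year figure only, it assumes spending is spread evenly over the six years, which the post does not state.

```python
# Split of the quoted ~€500 million budget across the three funders.
TOTAL_EUR_MILLION = 500  # "around €500 million over six years"
YEARS = 6
SHARES = {
    "European Union": 0.50,
    "BMBF": 0.25,
    "MKW NRW": 0.25,
}

# Each funder's approximate contribution in millions of euros.
contributions = {name: TOTAL_EUR_MILLION * share for name, share in SHARES.items()}

for name, amount in contributions.items():
    print(f"{name}: ~€{amount:.0f} million")
# Simple average; assumes an even spread over the years (not stated in the post).
print(f"Average per year: ~€{TOTAL_EUR_MILLION / YEARS:.0f} million")
```

This prints about €250 million for the European Union, about €125 million each for BMBF and MKW NRW, and an average of about €83 million per year.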

Not just hardware – a complete HPC ecosystem!

JUPITER will not arrive as bare metal. Users can expect a full software stack, including compilers, MPI, numerical libraries, debuggers, and performance analysis tools.

But software is only half the story. A dedicated support team will be on hand to assist users with porting, tuning, and troubleshooting – including domain experts who speak your (scientific) language. Training will also be provided, ranging from introductory to more advanced levels.

If something’s missing – don’t worry. The environment will evolve with the needs of its users, and adjustments can be made as necessary. After all, this is not just about building a machine – it’s about enabling science.

I’m impressed: can I use it?

Yes, access to JUPITER is available through established allocation programs, depending on your institutional affiliation.

Researchers with an affiliation in Germany may apply for computing time through the Gauss Centre for Supercomputing (GCS), under either the Regular or Large Scale Project calls. [More information, submission deadlines, and application procedures.](https://www.gauss-centre.eu/for-users/hpc-access)

Researchers affiliated with institutions in EU Member States or countries associated with Horizon 2020 can apply via the EuroHPC Joint Undertaking Access Calls. These include calls for regular, extreme scale, development, benchmarking, and AI access. [More information, submission deadlines, and application procedures.](https://eurohpc-ju.europa.eu/supercomputers/supercomputers-access-calls_en)

Eligibility criteria and review processes apply in both cases. Consult the respective websites and support teams for guidance.

How does it relate to the EPICURE project?

Users who apply for computing time on JUPITER through a EuroHPC access call can also request support from the EPICURE project, for example with code porting and optimisation, performance analysis and debugging, and scaling and adapting workflows for JUPITER. Just specify this in your application!

Final thought

JUPITER is more than a supercomputer. It’s a milestone in European science and technology, and a tool that will help researchers across Europe do their best work.

References

[1] http://jupiter.fz-juelich.de/

[2] https://www.fz-juelich.de/en/ias/jsc/jupiter/faq